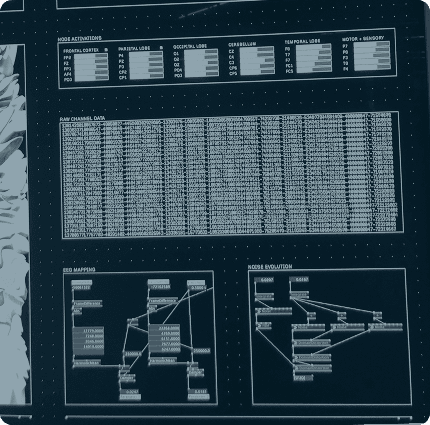

MLOps expertise ensures a smooth transition from development and training to production, with automated pipelines that handle versioning, monitoring, and scaling.

Multi-Model System Deployment and Orchestration

Computational Resource Management and Optimization

Optimal GPU Utilization

MLOps

    # mlops_pipeline.flow
    workflow {
      build(<automated_pipeline>)
      deploy(<trained_models>)
      scale(<compute_resources>)
      monitor(<production_health>)
    }
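As a rough illustration of the build, deploy, scale, and monitor sequence, the following minimal Python sketch runs named stages in order against a model version and stops at the first failure. The `PipelineRun` class, the `run_pipeline` function, and the stage callables are hypothetical placeholders, not part of any specific MLOps product; real stages would call out to build, deployment, and monitoring tooling.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# A stage is a name plus a callable that receives the model version
# and returns True on success. Both are illustrative assumptions.
Stage = Tuple[str, Callable[[str], bool]]


@dataclass
class PipelineRun:
    """Record of one pipeline execution for a specific model version."""
    model_version: str
    completed: List[str] = field(default_factory=list)
    failed: Optional[str] = None


def run_pipeline(model_version: str, stages: List[Stage]) -> PipelineRun:
    """Run stages in order and stop at the first one that fails."""
    run = PipelineRun(model_version)
    for name, step in stages:
        if not step(model_version):
            run.failed = name
            break
        run.completed.append(name)
    return run


# Placeholder stages that always succeed; real ones would do actual work.
STAGES: List[Stage] = [
    ("build", lambda version: True),
    ("deploy", lambda version: True),
    ("scale", lambda version: True),
    ("monitor", lambda version: True),
]

if __name__ == "__main__":
    result = run_pipeline("v1.2.0", STAGES)
    print(result.model_version, result.completed, result.failed)
```

Recording the model version on every run is what ties a deployment back to a specific trained artifact, which is the versioning part of the pipeline.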

    # deployment.flow
    workflow {
      deploy(<models>)
      optimize(<inference>)
      stream(<responses>)
      secure(<privacy>)
    }

Deploy LLMs effectively across environments, from local hosting for data privacy to hybrid architectures that balance cost, performance, and restrictive data access policies.

Streaming implementations and inference optimization make real-time AI interactions practical even with resource constraints.
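To make the streaming idea concrete, here is a minimal Python sketch that forwards text in small chunks as tokens arrive instead of waiting for the full reply. The function name `stream_response` and the `flush_every` parameter are illustrative assumptions; in practice the token source would be a model's incremental output rather than a plain list.

```python
from typing import Iterable, Iterator, List


def stream_response(tokens: Iterable[str], flush_every: int = 4) -> Iterator[str]:
    """Yield partial text every `flush_every` tokens, then any remainder."""
    buffer: List[str] = []
    for token in tokens:
        buffer.append(token)
        if len(buffer) >= flush_every:
            yield "".join(buffer)
            buffer.clear()
    if buffer:
        # Flush whatever is left once the token source is exhausted.
        yield "".join(buffer)


if __name__ == "__main__":
    # Stand-in for tokens produced incrementally by a model.
    fake_tokens = ["Real", "-time ", "answers ", "feel ", "faster", "."]
    for chunk in stream_response(fake_tokens, flush_every=2):
        print(chunk, end="", flush=True)
    print()
```

A smaller `flush_every` lowers the time to first visible text at the cost of more, smaller writes to the client.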

Local hosting: running 70B-parameter models

Inference optimization

Cost optimization

Streaming

Hybrid local/cloud deployment for cost optimization

Privacy-preserving inference without data leaving premises
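One way to picture a hybrid local/cloud setup is as a routing rule applied to each request. The sketch below is a simplified assumption rather than a prescribed policy: the `Request` class, the `contains_sensitive_data` flag, and the `max_local_queue` threshold are all hypothetical. Sensitive requests always stay on premises, and other traffic overflows to the cloud only when local capacity is saturated.

```python
from dataclasses import dataclass

LOCAL = "local"
CLOUD = "cloud"


@dataclass(frozen=True)
class Request:
    prompt: str
    contains_sensitive_data: bool  # assumed to be set by an upstream classifier


def choose_backend(request: Request, local_queue_depth: int, max_local_queue: int = 8) -> str:
    """Pick where to run inference for a single request."""
    if request.contains_sensitive_data:
        # Privacy rule comes first: this data never leaves the premises,
        # even if the local queue is long.
        return LOCAL
    if local_queue_depth < max_local_queue:
        # Prefer local hardware while it still has headroom.
        return LOCAL
    # Non-sensitive overflow traffic goes to the cloud.
    return CLOUD


if __name__ == "__main__":
    print(choose_backend(Request("patient record summary", True), local_queue_depth=20))
    print(choose_backend(Request("translate a slogan", False), local_queue_depth=20))
```

Checking the privacy condition before the capacity condition is the key design choice: load can delay a sensitive request, but it can never reroute it.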

Match the right model to each task, avoiding the inefficiency of using oversized models for simple problems or undersized ones for complex challenges.

Our systematic approach evaluates task requirements against model capabilities, creating efficient workflows that dynamically route queries to appropriate models based on complexity and required accuracy.

Matching model size to task complexity

Efficient and understandable LLM workflows

Model switching based on query complexity
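Routing by query complexity can be sketched in a few lines of Python. Everything here is an illustrative assumption: the scoring heuristic, the `REASONING_HINTS` keyword set, the thresholds, and the tier names `small-model`, `medium-model`, and `large-model`. A production router would more likely use a trained classifier and measured accuracy requirements instead of word counts.

```python
# Hypothetical keywords that suggest multi-step reasoning is needed.
REASONING_HINTS = {"prove", "derive", "explain", "compare", "analyze"}

# (upper score bound, model name) pairs, ordered from cheapest to largest.
MODEL_TIERS = [
    (0.3, "small-model"),
    (0.7, "medium-model"),
    (1.0, "large-model"),
]


def estimate_complexity(query: str) -> float:
    """Crude heuristic score in [0, 1] based on length and reasoning keywords."""
    words = query.split()
    length_score = min(len(words) / 100, 1.0)
    has_hint = any(word.lower().strip("?.,!") in REASONING_HINTS for word in words)
    keyword_score = 0.5 if has_hint else 0.0
    return min(length_score + keyword_score, 1.0)


def select_model(query: str) -> str:
    """Return the cheapest tier whose bound covers the query's score."""
    score = estimate_complexity(query)
    for upper_bound, model in MODEL_TIERS:
        if score <= upper_bound:
            return model
    return MODEL_TIERS[-1][1]


if __name__ == "__main__":
    for q in ["What time is it?", "Prove that the sum of two even numbers is even"]:
        print(q, "->", select_model(q))
```

Keeping the tiers in a single ordered table makes the routing decision easy to inspect and adjust, which helps keep the workflow understandable as well as efficient.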